When it comes to identifying and reporting on malvertising threats, there is one huge elephant in the room that nobody wants to address.

That elephant is “false positives.”

A false positive occurs when an ad is labeled as “bad” by anti-malvertising software and blocked, even though it was actually a legitimate ad.

Another way to think about a false positive is as an action or ad that a publisher’s specific threat model should allow (e.g., you don’t want that particular ad blocked) but that is blocked nonetheless.

Why are false positives such a big deal? First and foremost, this is costing you revenue!

Every ad labeled as bad that wasn’t is revenue you could have collected but didn’t. False positives also inflate the number of threats that anti-malvertising tools report, even though those ads presented no threat, which gives you an inaccurate measure of how many threats your site is actually experiencing.

So why do false positives happen, and how bad are they, really? Read on to find out.

What is the Definition of a Threat?

To start, it’s important to get on the same page with definitions.

Unfortunately, the AdTech industry has very little consistency on what the real definition of a “threat” is when it comes to malvertising. In fact, we regularly see other anti-malvertising solution providers use a far broader definition than we do. We can’t speak to why this is, but we do know that a broader definition certainly helps show higher numbers of “threats blocked,” possibly giving you a false sense of security that you are “getting what you are paying for.”

The question we should really all be asking is, were the ads that were blocked actually malicious in nature?

Here at HUMAN, we typically consider a threat to be real only if we see evidence of actual malicious JavaScript execution. In essence, we narrow our definition from “that looks like suspicious activity” to “that is verified, documented, and confirmed to be malicious activity.”

While it seems like a small change, it is a very important distinction.

Using broader definitions for threats leads to two problems.

First, casting such a wide net is likely to catch the ads that are in fact malicious, but also plenty of ads that are not. Every ad flagged as malicious that wasn’t is a false positive.

Second, taking a broad approach to threat identification leads to overinflated threat levels. If I report a total number of blocked threats to you, but half of those threats are really false positives, it might look like I’m doing a really good job of protecting you, when in reality the numbers don’t tell an accurate story.
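To put numbers on that, here is a minimal TypeScript sketch, using made-up figures, of how false positives inflate a report. The `ThreatReport` shape and the function names are hypothetical; the point is simply that if half of 1,000 reported blocks were false positives, the real threat count is 500 and the report overstates it by a factor of two.

```typescript
// Hypothetical summary of what a blocking tool reported over some period.
interface ThreatReport {
  reportedBlocks: number;     // everything counted as a "threat blocked"
  confirmedMalicious: number; // blocks later verified as actually malicious
}

// Fraction of reported blocks that were real threats (precision).
function precision(r: ThreatReport): number {
  // With nothing reported, nothing was wrongly reported.
  return r.reportedBlocks === 0 ? 1 : r.confirmedMalicious / r.reportedBlocks;
}

// Blocks that were really false positives.
function falsePositives(r: ThreatReport): number {
  return r.reportedBlocks - r.confirmedMalicious;
}

// How many times larger the reported count is than the real one.
function inflationFactor(r: ThreatReport): number {
  if (r.reportedBlocks === 0) return 1;
  if (r.confirmedMalicious === 0) return Infinity;
  return r.reportedBlocks / r.confirmedMalicious;
}

// Made-up numbers: 1,000 reported blocks, only 500 of them real.
const report: ThreatReport = { reportedBlocks: 1000, confirmedMalicious: 500 };
console.log(precision(report));       // 0.5
console.log(falsePositives(report));  // 500
console.log(inflationFactor(report)); // 2
```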

We see this frequently in competitive sales processes between cleanAD and other anti-malvertising software. Publishers and advertising platforms will look at the total threat numbers we're reporting and compare them directly to those reported from another solution provider. When they see higher threat numbers from our competitors, they wonder what accounts for the discrepancy.

The Problems with False Positives

OK, so we know what false positives are, but are they really that bad? The answer is a definitive “yes.” False positives create two very big problems for publishers and advertising platforms:

- They hurt your revenue as a publisher. Yes, you read that right. Every false positive is a blocked ad that you would otherwise have been paid for, and now you’ve made nothing. Ouch. On top of that, the advertiser may now be blocked from running any creative in the future.

- They confuse your reporting. As mentioned above, false positives over-inflate the threat numbers in your reports. Having an accurate view of your threat landscape is important. Having a fake number that makes you “feel good” but is inaccurate is useless.
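Here’s a rough way to estimate the first problem, as a TypeScript sketch. It assumes the blocked inventory is priced per thousand impressions (CPM) and uses hypothetical numbers. It also ignores the knock-on cost of an advertiser being blocked from future campaigns, so treat the result as a floor rather than a full accounting.

```typescript
// Revenue an ad would have earned, priced per 1,000 impressions (CPM).
function revenueFor(impressions: number, cpmUsd: number): number {
  return (impressions / 1000) * cpmUsd;
}

// Hypothetical month: 200,000 impressions wrongly blocked at a $2.50 CPM.
const falsePositiveImpressions = 200_000;
const cpmUsd = 2.5;

const lostUsd = revenueFor(falsePositiveImpressions, cpmUsd);
console.log(`Revenue lost to false positives: $${lostUsd.toFixed(2)}`);
// Revenue lost to false positives: $500.00
```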

In short, false positives boil down to inflated numbers that cost you revenue. That sounds pretty terrible, doesn’t it?

How False Positives Happen

False positives usually happen out of an abundance of caution, so they don’t necessarily come from a bad place.

Many anti-malvertising tools take this approach: search a massive list of known offenders and compare attributes of the advertiser submitting the creative, or of the creative itself, against that list to flag potentially malicious activity.

The problem is that if you rely on a huge list, you have to cast a very wide net to be sure you capture the malicious ads, and that net also catches some good ads along with the bad ones.
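To see how a wide net catches good ads, here is a simplified TypeScript sketch of list-and-pattern matching. The offender domains, the code patterns, and the `staticVerdict` function are all hypothetical and are not any particular vendor’s rules; they just show that a benign creative which happens to mention a redirect gets blocked without anyone checking what the code actually does.

```typescript
// Hypothetical creative under review.
interface Creative {
  advertiserDomain: string;
  source: string; // the creative's HTML/JavaScript as text
}

// Hypothetical, deliberately broad rules: known-offender domains plus
// code patterns that *sometimes* appear in malicious ads.
const knownOffenderDomains = ["bad-ads.example.net", "redirect-cdn.example.net"];
const suspiciousPatterns = [/redirect/i, /window\.location/, /eval\(/];

function staticVerdict(c: Creative): { blocked: boolean; reasons: string[] } {
  const reasons: string[] = [];
  // Suffix matching catches subdomains, but also look-alikes such as "notbad-ads.example.net".
  for (const d of knownOffenderDomains) {
    if (c.advertiserDomain.endsWith(d)) reasons.push(`offender list: ${d}`);
  }
  // Pattern matching looks at the text of the code, not at what it does.
  for (const p of suspiciousPatterns) {
    if (p.test(c.source)) reasons.push(`pattern: ${p.source}`);
  }
  return { blocked: reasons.length > 0, reasons };
}

// A benign creative whose click handler sends the user to a store page.
const benign: Creative = {
  advertiserDomain: "game-studio.example.com",
  source: 'button.addEventListener("click", () => { window.location.href = storeUrl; }); // redirect to store',
};
const verdict = staticVerdict(benign);
console.log(verdict.blocked);        // true
console.log(verdict.reasons.length); // 2
```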

By contrast, cleanAD uses real-time, client-side behavioral analysis: it waits and catches ads as they attempt to execute malicious JavaScript, so we can be sure an ad is actually malicious in the first place.
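Here is a minimal TypeScript sketch of the decision rule behind that idea. It is not cleanAD’s implementation: it assumes browser-side instrumentation has already recorded what the creative actually did as a list of hypothetical `RuntimeEvent` objects, and for illustration it treats only forced behavior (a navigation or pop-up the user did not trigger) as confirmed malicious.

```typescript
// Hypothetical record of what a creative actually did at runtime.
type RuntimeEvent =
  | { kind: "render"; element: string }
  | { kind: "navigation"; target: string; userInitiated: boolean }
  | { kind: "popup"; target: string; userInitiated: boolean };

interface Verdict {
  blocked: boolean;
  evidence: RuntimeEvent[]; // the observed behavior that justified the block
}

// Block only on behavior that actually happened and was forced on the user.
function behavioralVerdict(events: RuntimeEvent[]): Verdict {
  const evidence = events.filter(
    (e) => (e.kind === "navigation" || e.kind === "popup") && !e.userInitiated,
  );
  return { blocked: evidence.length > 0, evidence };
}

// A click-through the user asked for is allowed...
const clickThrough: RuntimeEvent[] = [
  { kind: "render", element: "img" },
  { kind: "navigation", target: "https://store.example.com/app", userInitiated: true },
];
// ...while a redirect fired without any click is blocked, with evidence attached.
const forcedRedirect: RuntimeEvent[] = [
  { kind: "navigation", target: "https://scam.example.net", userInitiated: false },
];

console.log(behavioralVerdict(clickThrough).blocked);   // false
console.log(behavioralVerdict(forcedRedirect).blocked); // true
```

Because every block carries the events that caused it, a verdict like this can be audited after the fact, which is what keeps the reported threat count honest.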

An Example of a False Positive in Action

We can talk about false positives all day long, but we figured it would be easier to illustrate with a real-life example.

In this particular case, we were working with a platform partner who had our script running alongside a competitor’s product. The competitor’s product flagged a specific ad as malicious and blocked it. The client followed up and asked us to review the creative and find out why our system didn’t flag it.

The creative included an image and a snippet of JavaScript. Here’s a look at what a sanitized version of the script looked like:

What happened was this:

- The other platform was reading some of the “redirect” language in the script and automatically assuming that it was forcing a malicious redirect.

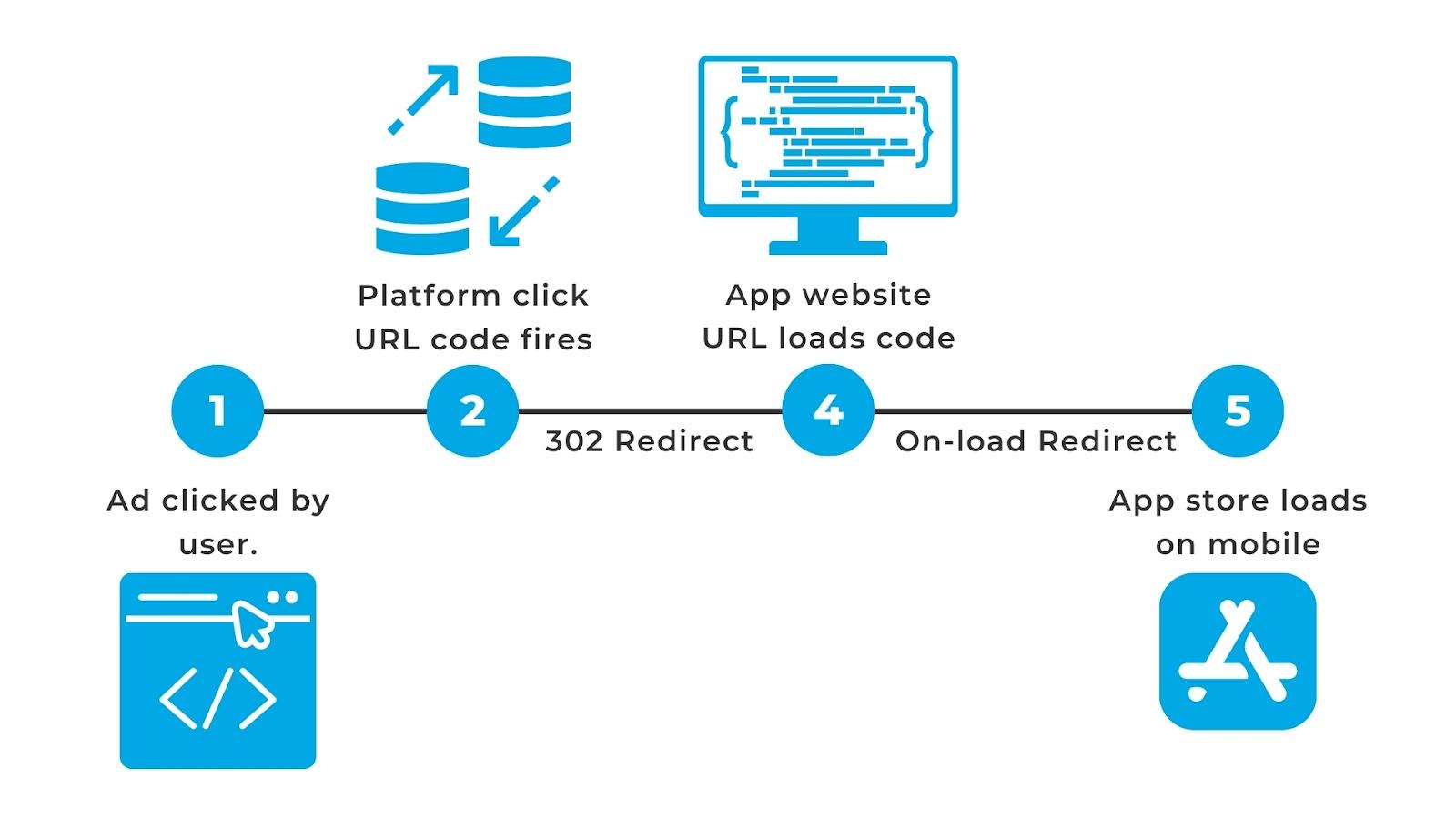

- Instead, what was actually happening is the script was detecting whether the user was on a mobile device or a desktop and choosing to direct them to download an app either from a web app store (if on a desktop) or redirecting them to the actual App Store application (if on a mobile device). The redirect to the app store only happened after the user had already clicked on the ad and was not malicious or auto-redirect.
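The behavior described above can be sketched in TypeScript. This is not the advertiser’s script: the store URLs, the user-agent check, and the `attachClickThrough` helper are hypothetical stand-ins. What matters is that the navigation lives inside a click listener, so nothing happens until the user clicks, which is exactly the kind of code a keyword rule can misread.

```typescript
// Hypothetical destinations; the real creative's URLs are not shown here.
const WEB_STORE_URL = "https://store.example.com/app";
const NATIVE_STORE_URL = "https://native-store.example.com/app";

// Crude user-agent check, good enough for an illustration.
function isMobile(userAgent: string): boolean {
  return /Android|iPhone|iPad|iPod/i.test(userAgent);
}

// Mobile users go to the native store, desktop users to the web store.
function storeUrlFor(userAgent: string): string {
  return isMobile(userAgent) ? NATIVE_STORE_URL : WEB_STORE_URL;
}

// Minimal element shape so the sketch runs without a browser.
interface Clickable {
  addEventListener(type: "click", listener: () => void): void;
}

// The navigation is wired to a click listener, so it only runs after a user click.
function attachClickThrough(
  el: Clickable,
  userAgent: string,
  navigate: (url: string) => void,
): void {
  el.addEventListener("click", () => navigate(storeUrlFor(userAgent)));
}

// Simulate a page load followed by a click on an iPhone.
const visited: string[] = [];
const listeners: Array<() => void> = [];
const fakeAd: Clickable = {
  addEventListener: (_type, listener) => {
    listeners.push(listener);
  },
};

attachClickThrough(fakeAd, "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X)", (url) => {
  visited.push(url);
});
console.log(visited.length); // 0: nothing happens on load
listeners.forEach((fn) => fn()); // the user clicks the ad
console.log(visited[0]); // https://native-store.example.com/app
```

Passing the element and the navigation function in keeps the sketch runnable outside a browser; in a real creative they would be the ad’s DOM element and something that sets `window.location.href`.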

This is an example of a blanket rule that looks for certain words or code snippets instead of understanding what the code actually does, and a great illustration of how behavioral analysis can help reduce false positives.

The other platform flagged this ad, stopped it from running, and cost the platform revenue from this advertiser. It would be easy for the platform to think this was evidence of their solution working, when in reality it was simply a false positive.

The Bottom Line

False positives are hard to measure, but they are a costly problem. Make sure you know whether the solutions you use (or are evaluating) are designed to block only confirmed threats, so you aren’t sacrificing ad revenue.